COVID-19 and the limits of democracy?

Use of Artificial Intelligence to surveil COVID-19 measures

Ekaterina Jussupow and Armin Heinzl, University of Mannheim

To what extent may technology interfere with the privacy and freedom of individuals?

In Germany, the use of tracking apps is being discussed as a way to better understand the course of COVID-19 and to prevent infections. Other countries, however, have already taken more drastic measures. China and Russia, for example, are using artificial intelligence (AI) algorithms to provide real-time information about violations of quarantine measures. The algorithms use neural networks to recognize faces and then match them against a known database (such as passport photos of quarantined citizens). Chinese companies have already developed such algorithms to the point where they can recognize people wearing face masks (Reuters). However, little is known about the accuracy of the algorithms used against COVID-19. At the same time, reports such as the BBC's on the use of such algorithms in Moscow illustrate the drastic impact on individuals’ freedom: within minutes of a person leaving the house, the police were informed of the quarantine breach.
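The matching step described above can be sketched in a few lines. This is only an illustration under stated assumptions, not the method used by any of the systems mentioned: it assumes that a neural network has already turned each face image into a numeric vector (an "embedding"), and the names `cosine_similarity` and `best_match`, the three-dimensional vectors, and the threshold of 0.8 are all hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def best_match(probe, database, threshold=0.8):
    """Return (person_id, score) for the most similar database entry.

    person_id is None when no entry reaches the (hypothetical) threshold,
    i.e. the face is treated as unknown.
    """
    best_id, best_score = None, -1.0
    for person_id, embedding in database.items():
        score = cosine_similarity(probe, embedding)
        if score > best_score:
            best_id, best_score = person_id, score
    if best_score >= threshold:
        return best_id, best_score
    return None, best_score


# Invented toy embeddings; real systems use vectors with hundreds of dimensions.
database = {
    "person_a": [1.0, 0.0, 0.0],
    "person_b": [0.0, 1.0, 0.0],
}

match_id, match_score = best_match([0.9, 0.1, 0.0], database)
unknown_id, unknown_score = best_match([1.0, 1.0, 0.0], database)
```

The threshold is where the accuracy question raised above becomes concrete: a lower value flags more people, including people who are not in the database at all, while a higher value misses more actual matches.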

Before COVID-19, we discussed the ethical and moral implications of AI systems, the existence of errors in the algorithms, and the concern that these algorithms interfere too much with our lives (Rahwan et al., 2019): they control what information we see on social media, are able to detect diseases on CT images, or decide whether an applicant fits a job advertisement. Only recently has science begun to discuss what side effects result from this and how such algorithms create new ways to control human behavior (Kellogg, Valentine and Christin, 2020). In the COVID-19 crisis, many of these concerns seem to have been forgotten, because the threat from this novel pandemic appears too great. All of a sudden there is talk in Germany of a nationwide collection of personal data with the aim of stopping the spread of the virus. But can we really speak of an increased acceptance of such technologies? What are the scientific principles underlying this?

Terror management theory (Greenberg, Pyszczynski and Solomon, 1986) is widely used in the social sciences and especially in psychology. The theory states that awareness of our own mortality creates a need in people to turn to values and attitudes that protect their own worldview. This is especially true of conservative worldviews. For example, it has been shown that the terrorist attacks of 11 September led to a significantly higher approval rate for the conservative George W. Bush (Landau et al., 2004), and that the Iraq war increased the approval rate for conservative politics (Lyall and Thorsteinsson, 2007). Existing conservative attitudes in particular were heightened by an awareness of mortality. But would these attitudes also have an impact on the approval of AI-based surveillance systems that encroach heavily on the privacy of individuals?

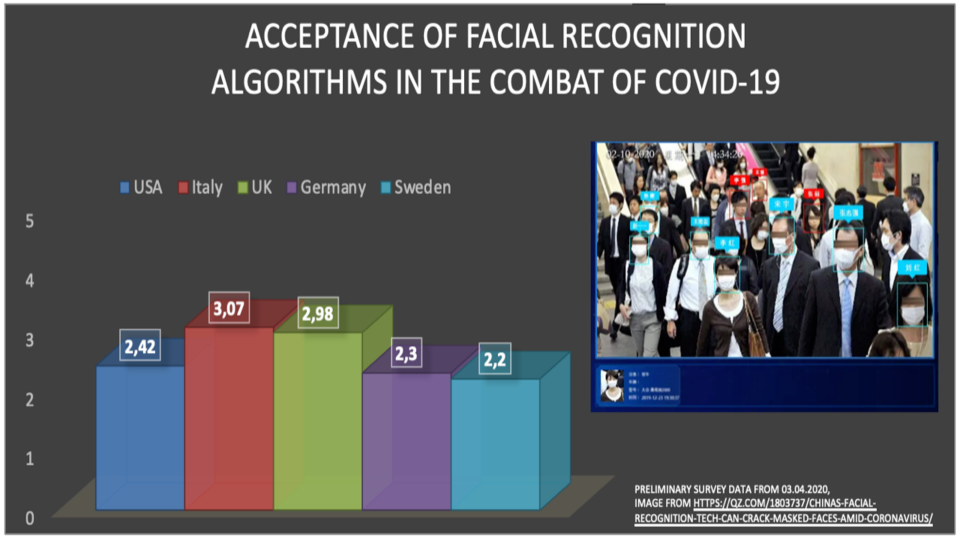

To test this, we conducted an initial online study in early April across five countries (USA, Italy, UK, Germany and Sweden) with about 900 participants. We asked the participants to what extent they would agree with statements such as "facial recognition algorithms should monitor the behavior of all infected and potentially infected persons". For the analysis, we also considered the relative number of deaths in each respondent's country, as well as their general attitude towards conservative values and their concerns about invasion of privacy. Our initial data analysis showed that both the perceived threat of the virus and the relative death rate in the country have a strong positive influence on the acceptance of such an application of artificial intelligence. The following figure illustrates the mean values and standard deviations of agreement with the use of facial recognition algorithms across countries. It is noticeable that Italy in particular has relatively high approval rates, as the crisis had the strongest impact there at the time of data collection. In the UK, relatively low privacy concerns combined with the high threat of COVID-19 result in relatively high approval rates. In all countries, the subjectively perceived threat of COVID-19 contributes to an increase in approval of such algorithms (cf. illustration in the cover picture).
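For readers who want to see how per-country means and standard deviations of this kind are computed, the following is a minimal sketch. It does not use the study data: the scores are invented purely for illustration, the numeric agreement scale is an assumption (the response scale is not stated here), and the function name `summarize_by_country` is hypothetical.

```python
from collections import defaultdict
from statistics import mean, stdev

# Invented (country, agreement score) pairs; NOT data from the study.
responses = [
    ("Italy", 5), ("Italy", 6), ("Italy", 4),
    ("Germany", 3), ("Germany", 2), ("Germany", 4),
]


def summarize_by_country(rows):
    """Group scores by country and return {country: (mean, sample standard deviation)}.

    Each country needs at least two scores, because the sample standard
    deviation is undefined for a single value.
    """
    grouped = defaultdict(list)
    for country, score in rows:
        grouped[country].append(score)
    return {
        country: (mean(scores), stdev(scores))
        for country, scores in grouped.items()
    }


summary = summarize_by_country(responses)
for country, (avg, sd) in summary.items():
    print(f"{country}: mean={avg:.2f}, sd={sd:.2f}")
```

A summary like this only describes differences between countries. Estimating the influence of perceived threat or of the relative death rate, as reported above, would require a regression-type analysis on top of it.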

In light of these results, the use of AI systems must be examined very critically. These technologies exist, and they are being used more and more often even though we have not fully discussed their ethical implications. And even if this is not yet a reality in Germany, a crisis like COVID-19 can suddenly make us more open to restrictions on our privacy. An intensive exchange between citizens and scientists is unavoidable, so that results like these lead us to reflect on why we are so willing to share all our data for the benefit of all and why, by contrast, we have such reservations about making our data available to our health care system. A crisis such as COVID-19 invites social reflection, and we should make conscious decisions about how to deal with AI technology in the long term. We should also be aware that our approval or rejection of such technologies is not based solely on rational considerations (see also Jussupow, Spohrer, Heinzl and Link, 2018), but can also be influenced by social movements.

Literature

Greenberg, J., T. Pyszczynski and S. Solomon. (1986). “The Causes and Consequences of a Need for Self-Esteem: A Terror Management Theory.” In: Public Self and Private Self.

Jussupow, E., K. Spohrer, A. Heinzl and C. Link. (2018). “I am; We are - Conceptualizing Professional Identity Threats from Information Technology.” In: Proceedings of the International Conference on Information Systems - Bridging the Internet of People, Data, and Things. San Francisco.

Kellogg, K. C., M. A. Valentine and A. Christin. (2020). “Algorithms at work: The new contested terrain of control.” Academy of Management Annals, 14(1), 366–410.

Landau, M. J., S. Solomon, J. Greenberg, F. Cohen, T. Pyszczynski, J. Arndt, … A. Cook. (2004). “Deliver us from evil: The effects of mortality salience and reminders of 9/11 on support for President George W. Bush.” Personality and Social Psychology Bulletin, 30(9), 1136–1150.

Lyall, H. C. and E. B. Thorsteinsson. (2007). “Attitudes to the Iraq war and mandatory detention of asylum seekers: Associations with authoritarianism, social dominance, and mortality salience.” Australian Journal of Psychology, 59(2), 70–77.

Rahwan, I., M. Cebrian, N. Obradovich, J. Bongard, J. F. Bonnefon, C. Breazeal, … M. Wellman. (2019). “Machine behaviour.” Nature.